
How to Make an Effective Survey

Effective surveys result from clarity about their purpose

The key to a great story is not who, or what, or when, but WHY.*

So too with surveys. The key to an effective survey is being clear about its purpose.

Surveys are a means to an end rather than an end in themselves. Why are you running this survey? What makes a survey effective is the extent to which it contributes to achieving that end.

For example, is the purpose to:

- reduce staff turnover and recruitment costs?

- change employee behaviour to be better able to deliver the new company vision?

- identify opportunities for process improvement and good practice to share?

- check if messaging is getting through to all the right people?

- something else?

Clear thinking about WHY is crucial to creating and running an effective survey.

Measurement is an act of selection

We don't always stop to think that measurement is an act of selection. By choosing to measure certain things, we lift them out of the background and draw attention to them. What we don't measure remains hidden. That's why asking the right questions is so important. By bringing certain things into focus, we also send subliminal messages.

Surveys are two-way communication

Perhaps surprisingly, surveys are two-way communication. Of course they are about collecting information, but every survey also communicates more subtle meanings to everyone who sees it. It says: This is what we think is important, and this is how professionally (or not) we go about our work. It says: This is the extent to which we actually value you, seek to engage with you and include you in what we are doing.

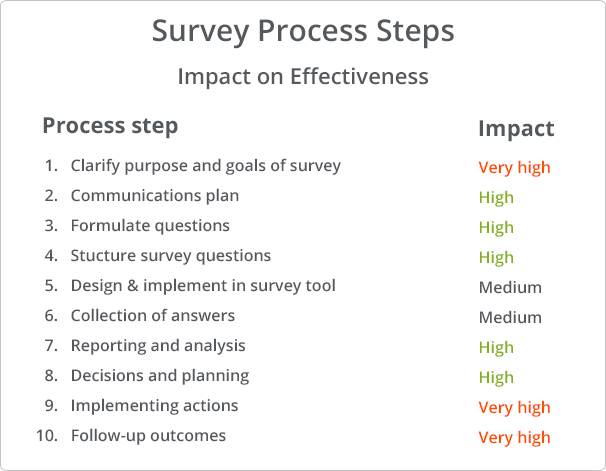

Survey Process Steps

In the overall survey process, several steps contribute in different ways to survey effectiveness.

The table sets out the process steps and their importance in creating effective surveys. The assessment of impact is somewhat subjective and varies by survey. The purpose of the table is really to highlight that making effective surveys is about more than entering questions in a survey tool and collecting answers.

The list format is not meant to imply a strict sequence of events with a beginning and an end. It's a continuous process where the last step feeds back into the first. There are also iterations along the way, looping back to earlier steps.

1. Clarity about purpose, goals and scope

Unless we know what the purpose of a survey is, the notion of effectiveness ("doing the right things") is not meaningful. The task of policy makers and leaders is to set goals and direction, and to articulate them clearly.

A plan for a survey can also include specific actions to be taken. For example, the plan for an engagement survey might specify that each team will discuss its results and agree an action plan for the next 6 months.

2. Ongoing communication and communication plan

You need to bring people with you, whether they are employees, customers or suppliers. When the plan is clear, tell them. Why is the survey being run? What will happen? What is expected of participants, and why is their support crucial? How will the collected information be used?

Plan the communication at the outset and make it an integral part of the survey process, rather than hastily cobbling together a cover note when the survey is ready to be sent out.

3. Formulating questions

This can be hard. Experience obviously helps. Some say it's an art.

There is plenty of advice on how to write good questions, so we won't cover it here. It's still worth emphasising that it's crucially important to be brief and clear. Make sure that each question is about only one thing. Beware of words like "and" and "or", which often signal that a question is really asking about two things at once.
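As a rough aid, a short script can flag questions that contain "and" or "or" as whole words so you can review them by hand. This is a minimal Python sketch under our own assumptions: the function name and the two-word list are illustrative, and a match is only a prompt to look again, since some questions use these words legitimately.

```python
import re

# Conjunctions that often signal a question about two things at once.
# This short list is an illustrative assumption, not an exhaustive rule.
CONJUNCTIONS = ("and", "or")


def flag_double_barrelled(question: str) -> list[str]:
    """Return the conjunctions that appear as whole words in the question."""
    # Split into lowercase words so "organisation" does not match "or".
    words = re.findall(r"[a-z']+", question.lower())
    return [w for w in CONJUNCTIONS if w in words]


questions = [
    "How satisfied are you with your pay and benefits?",
    "How satisfied are you with your pay?",
    "Is your manager approachable or helpful?",
]

for q in questions:
    hits = flag_double_barrelled(q)
    if hits:
        print(f"Review: {q!r} contains {hits}")
```

Matching whole words rather than substrings keeps false alarms down, but the final judgement about whether a question really covers two things is still yours.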

Standard question sets

A word of caution also about the use of standard sets of questions. There are situations where standard sets of questions are necessary and useful, for example when assessing personality or learning styles. A standardised measurement tool ensures consistency and comparability across a wide range of respondents.

In other situations, standard sets of questions may not fit well with a particular environment. A typical example is benchmarking, where standard questions are needed to ensure comparability of individual results with a benchmark. It's a dilemma when standard questions are not well matched with how things are done in your organisation. If standard questions do not take unique and important circumstances into account, they may not be the right ones to use in your case. This requires some judgement and trade-offs.

4. Structuring the survey questions

Grouping similar questions together makes it easier for respondents to focus while also providing a useful structure for reporting. Giving the grouping a title also helps set the context. For example: My Company, My Team, My Manager, Communication.

It is common to see a single open question like "Is there anything else you'd like to tell us about?" at the end of a survey. Instead, consider having such an open-ended question at the end of each topic. This captures thoughts while they are still fresh in the respondent's mind and also pre-sorts them for reporting purposes. For example, you will automatically get a collection of comments about "Communication" or "My Team".

5. Implementing the survey in a survey tool

There are many survey tools available, with varying capabilities and at different price points. Learning to use one is no different from learning to use any other application. They allow varying levels of control over the survey-taking experience and presentation. A well-implemented, nice-looking survey has a positive effect on the respondent's experience. A poor experience reflects badly on the sender of the survey and can affect response rates.

Testing the survey is always important and many survey tools have means to run tests and get feedback before launch. Remember to remove any test responses from the data!
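If your survey tool lets you export responses, test entries can also be filtered out afterwards. The sketch below assumes a hypothetical export with an `is_test` flag and an `email` field, plus your own list of addresses used during testing. Real tools name and mark these fields differently, so check your own export before relying on anything like this.

```python
# Hypothetical exported responses; real survey tools use different field names.
responses = [
    {"id": 1, "email": "anna@example.com", "is_test": False, "score": 4},
    {"id": 2, "email": "tester@example.com", "is_test": True, "score": 1},
    {"id": 3, "email": "ben@example.com", "is_test": False, "score": 5},
    {"id": 4, "email": "qa-team@example.com", "is_test": False, "score": 2},
]

# Addresses used during pre-launch testing (an assumption: keep your own list).
TEST_EMAILS = {"tester@example.com", "qa-team@example.com"}


def remove_test_responses(rows, test_emails):
    """Drop rows flagged as tests or submitted from a known test address."""
    return [
        r for r in rows
        if not r.get("is_test", False) and r.get("email") not in test_emails
    ]


clean = remove_test_responses(responses, TEST_EMAILS)
print([r["id"] for r in clean])  # [1, 3]
```

Checking both a flag and a list of test addresses catches test runs that were submitted through the live link rather than a preview mode.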

6. Carrying out the collection

This is about smooth execution, being easy to use and reinforcing the brand. It's also another opportunity to communicate. Provided the basics work, this is not usually crucial to survey effectiveness.

7. Reporting and analysis

Once answers are in, data analysis and reporting can begin. You will have set the stage for reporting and analysis in earlier stages when you formulated questions and structured your survey.

All too often, there is a realisation at this stage that questions could or should have been asked differently. Or that some additional questions should also have been included.

ReportGorilla has a useful learning feature that allows you to examine and test reporting options BEFORE any data has been collected. This means that, even during the survey design phase, you can realistically experience what reporting will be possible for your current survey. You can then adjust your survey design until you get the reporting you need. We all learn through feedback. Even survey designers!

It's also a great way to engage decision makers early in the process and get their sign-off before the survey is launched. You can read more about Better Survey Design with Report Previews here.

8. Decision making and action planning

This is about making reports and insights available to the people who are going to make choices and decisions. In some cases they may be the very people who have done the analysis, in which case this step is tightly coupled with the previous one.

In other cases, it's a question of making appropriate reports available to the right people. Which reports depends on what choices or decisions they are required to make. There is a range of different scenarios and possibilities. You can read about some reporting scenarios here.

9. Implementing the action

Collected data is useless unless you do something with it. Similarly, reporting and analysis is useless unless it results in some action or change of behaviour. (Drawing insights from the analysis and deciding not to take action can be a valid course of action.)

The key here is to have already decided, in the survey plan, what activities will take place once survey results are available. And of course there is the unexpected insight, which you are more likely to come across if it is easy to create different kinds of reports.

For example, one company's process required each team leader to discuss results with their team and agree what actions to take. Since follow-up action was planned, team leaders had a strong incentive to encourage team members to respond to the survey.

10. Following up on outcomes - How did things change?

This final step in the cycle is to assess the impact of actions taken as a result of the survey. Have they had the desired effect? The interval and frequency of follow-up depend on the lead time for actions to filter through to changes in behaviour or greater efficiency. If changes take a month to have an effect, it makes sense to get feedback in that timeframe rather than to wait a year for the next survey. Why wait?

It could involve running a subset of survey questions again to see if there has been a change against the baseline measurement. Reports showing trends and change are useful for this.
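At its simplest, comparing a repeated subset of questions against the baseline means comparing the average rating per question. This sketch assumes hypothetical 1-5 ratings held in plain dictionaries keyed by question text. It deliberately ignores sample size and statistical significance, which matter when deciding whether a change is real.

```python
from statistics import fmean

# Hypothetical 1-5 ratings for a subset of questions, at baseline and follow-up.
baseline = {
    "I know what is expected of me": [3, 4, 2, 3],
    "My team communicates well": [2, 3, 2, 2],
}
follow_up = {
    "I know what is expected of me": [4, 4, 3, 4],
    "My team communicates well": [2, 2, 3, 2],
}


def change_against_baseline(before, after):
    """Return {question: (baseline mean, follow-up mean, change)} for questions asked both times."""
    result = {}
    for question in before.keys() & after.keys():
        b, a = fmean(before[question]), fmean(after[question])
        result[question] = (round(b, 2), round(a, 2), round(a - b, 2))
    return result


for q, (b, a, d) in sorted(change_against_baseline(baseline, follow_up).items()):
    print(f"{q}: {b} -> {a} ({d:+})")
```

Only questions present in both rounds are compared, so a follow-up that reuses part of the original survey still lines up with the baseline.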

Continuous improvement

Finally, in the spirit of continuous improvement: In one organisation, analysis showed that teams that had actively worked with survey results the previous year showed significantly greater improvement than those that had not. Stands to reason.

To improve the effectiveness of working with results, steps were taken to facilitate action planning. Guidelines were written for team leaders, and a special report was created for each team showing the top and bottom results for each topic. This made it easier for teams to engage with survey findings, discuss them and take constructive action.
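A top-and-bottom report of this kind can be sketched by ranking each topic's questions by average score. The data, topic names and the `top_and_bottom` function here are hypothetical. We only assume 1-5 ratings grouped by topic, as in the grouping described under Structuring the survey questions.

```python
from statistics import fmean

# Hypothetical results for one team: topic -> {question: list of 1-5 ratings}.
team_results = {
    "Communication": {
        "I get the information I need to do my job": [4, 5, 4],
        "Decisions are explained to us": [2, 3, 2],
        "I know where to find company news": [3, 4, 4],
    },
    "My Manager": {
        "My manager gives useful feedback": [5, 4, 5],
        "My manager recognises good work": [3, 3, 4],
    },
}


def top_and_bottom(results, n=1):
    """For each topic, return the n highest- and n lowest-scoring questions by mean rating."""
    report = {}
    for topic, questions in results.items():
        # Sort question texts from highest to lowest average rating.
        ranked = sorted(questions, key=lambda q: fmean(questions[q]), reverse=True)
        report[topic] = {"top": ranked[:n], "bottom": ranked[-n:]}
    return report


for topic, parts in top_and_bottom(team_results).items():
    print(topic)
    print("  strongest:", parts["top"])
    print("  weakest:  ", parts["bottom"])
```

If a topic has fewer than `2 * n` questions, the top and bottom lists will overlap, so `n` should stay small relative to the number of questions per topic.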

A process insight along the way that helped improve effectiveness.

* The quote is from Elliot Carver, the news tycoon villain from the James Bond film Tomorrow Never Dies.